
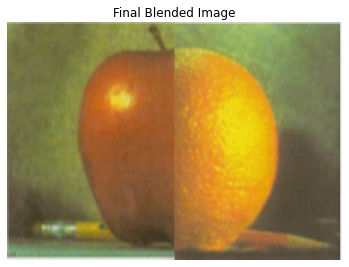

This project investigates various techniques for using frequencies to process and combine images in unique ways. Images can be sharpened by filtering and amplifying their higher frequencies. Finite difference kernels can be applied to extract edges. Hybrid images are created by merging the high-frequency components of one image with the low-frequency components of another. Additionally, images can be blended at different frequency levels using Gaussian and Laplacian pyramids.

In this part, I built intuitions about 2D convolutions and filtering.

Gradient magnitude computation is a technique used in image processing to measure the strength of changes (gradients) in pixel intensity, typically indicating edges or transitions within an image. It involves calculating the gradient of the image in both horizontal and vertical directions (usually using Sobel or finite difference operators) and then combining these to determine the overall magnitude at each pixel. The gradient magnitude is typically computed as the square root of the sum of the squares of the horizontal and vertical gradients, highlighting regions with significant intensity changes.
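The computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's actual code: the helper names `convolve2d_same` and `gradient_magnitude`, the replicated-border padding, and the tiny synthetic image are all assumptions made for the example.

```python
import numpy as np

# Finite difference operators, as named in the text.
DX = np.array([[1.0, -1.0]])
DY = np.array([[1.0], [-1.0]])

def convolve2d_same(image, kernel):
    """Naive same-size 2D convolution with replicated borders (clear, not fast)."""
    flipped = kernel[::-1, ::-1]  # flipping turns correlation into convolution
    kh, kw = flipped.shape
    top, left = kh // 2, kw // 2
    padded = np.pad(image, ((top, kh - 1 - top), (left, kw - 1 - left)), mode="edge")
    height, width = image.shape
    out = np.zeros((height, width), dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + height, j:j + width]
    return out

def gradient_magnitude(image):
    """Return (gx, gy, magnitude) for a 2D grayscale float image."""
    gx = convolve2d_same(image, DX)
    gy = convolve2d_same(image, DY)
    return gx, gy, np.sqrt(gx ** 2 + gy ** 2)

# Synthetic image with one vertical edge: dark left half, bright right half.
image = np.zeros((4, 6))
image[:, 3:] = 1.0
gx, gy, magnitude = gradient_magnitude(image)
print(magnitude)
```

On this synthetic image only the horizontal derivative responds, so the magnitude is nonzero only along the column where the intensity jumps.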

Here are the results of convolving the cameraman image with the finite difference operators Dx and Dy:

The edge image can be further refined by adjusting the threshold value to better balance noise suppression and edge detection.
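To make the threshold trade-off concrete, here is a small sketch that binarizes a gradient magnitude array at several thresholds. The function name `binarize_edges`, the magnitude values, and the thresholds are made up for illustration; a real image would need its threshold tuned by eye.

```python
import numpy as np

def binarize_edges(magnitude, threshold):
    """Mark pixels whose gradient magnitude exceeds the threshold as edges."""
    return magnitude > threshold

# Made-up gradient magnitudes: two strong edge pixels plus weaker noise responses.
magnitude = np.array([[0.02, 0.08, 0.90],
                      [0.12, 0.85, 0.05],
                      [0.03, 0.10, 0.04]])

for threshold in (0.05, 0.10, 0.50):  # hypothetical values to sweep
    edges = binarize_edges(magnitude, threshold)
    print(f"threshold={threshold:.2f} -> {int(edges.sum())} edge pixels")
```

A low threshold keeps the weak noise responses along with the real edges, while a high one keeps only the strongest responses and may drop faint edges.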


In this task, applying a Gaussian filter before the difference operation serves to smooth the image and reduce noise. When using just the difference operator (e.g., the finite difference), the high-frequency components (such as sharp edges and noise) are amplified, which can result in a noisy output. By first applying a Gaussian filter, we blur the image slightly, which smooths out the noise while preserving important edges.

The key difference is that the Gaussian-smoothed gradient produces cleaner and less noisy results compared to the direct application of the difference operator. Edges appear more defined, while the unwanted noise and high-frequency details are reduced. This results in an overall more visually appealing gradient, with edges more clearly distinguishable. The Gaussian filter effectively suppresses small, abrupt intensity changes due to noise, which helps in producing a clearer representation of the image's true structure.
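The effect can be checked numerically with a short sketch. It assumes a 9x9 Gaussian with sigma 2 and a synthetic noise-only image, neither of which comes from the project. It also shows that, because convolution is associative, blurring and then differencing gives the same result as a single convolution with a combined derivative-of-Gaussian kernel.

```python
import numpy as np

def gaussian_kernel_2d(ksize, sigma):
    """2D Gaussian built as the outer product of a normalized 1D Gaussian."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    return np.outer(g, g)

def convolve2d_full(a, b):
    """Full 2D convolution with zero padding (output grows by the kernel size)."""
    out = np.zeros((a.shape[0] + b.shape[0] - 1, a.shape[1] + b.shape[1] - 1))
    for i in range(b.shape[0]):
        for j in range(b.shape[1]):
            out[i:i + a.shape[0], j:j + a.shape[1]] += b[i, j] * a
    return out

DX = np.array([[1.0, -1.0]])
G = gaussian_kernel_2d(ksize=9, sigma=2.0)  # hypothetical kernel size and sigma

rng = np.random.default_rng(0)
noisy = rng.normal(0.0, 0.1, size=(32, 32))  # flat image carrying only noise

raw_dx = convolve2d_full(noisy, DX)                         # difference only
smooth_dx = convolve2d_full(convolve2d_full(noisy, G), DX)  # blur, then difference
dog_dx = convolve2d_full(noisy, convolve2d_full(G, DX))     # one combined kernel

# Compare noise levels away from the zero-padded borders.
raw_noise = raw_dx[:, 1:-1].std()
smooth_noise = smooth_dx[8:-8, 9:-9].std()
print(f"noise response: raw={raw_noise:.4f}, smoothed={smooth_noise:.4f}")
print("two-step and combined kernel agree:", np.allclose(smooth_dx, dog_dx))
```

Folding the Gaussian and the difference operator into one kernel saves a pass over the image, which matters more as images get larger.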

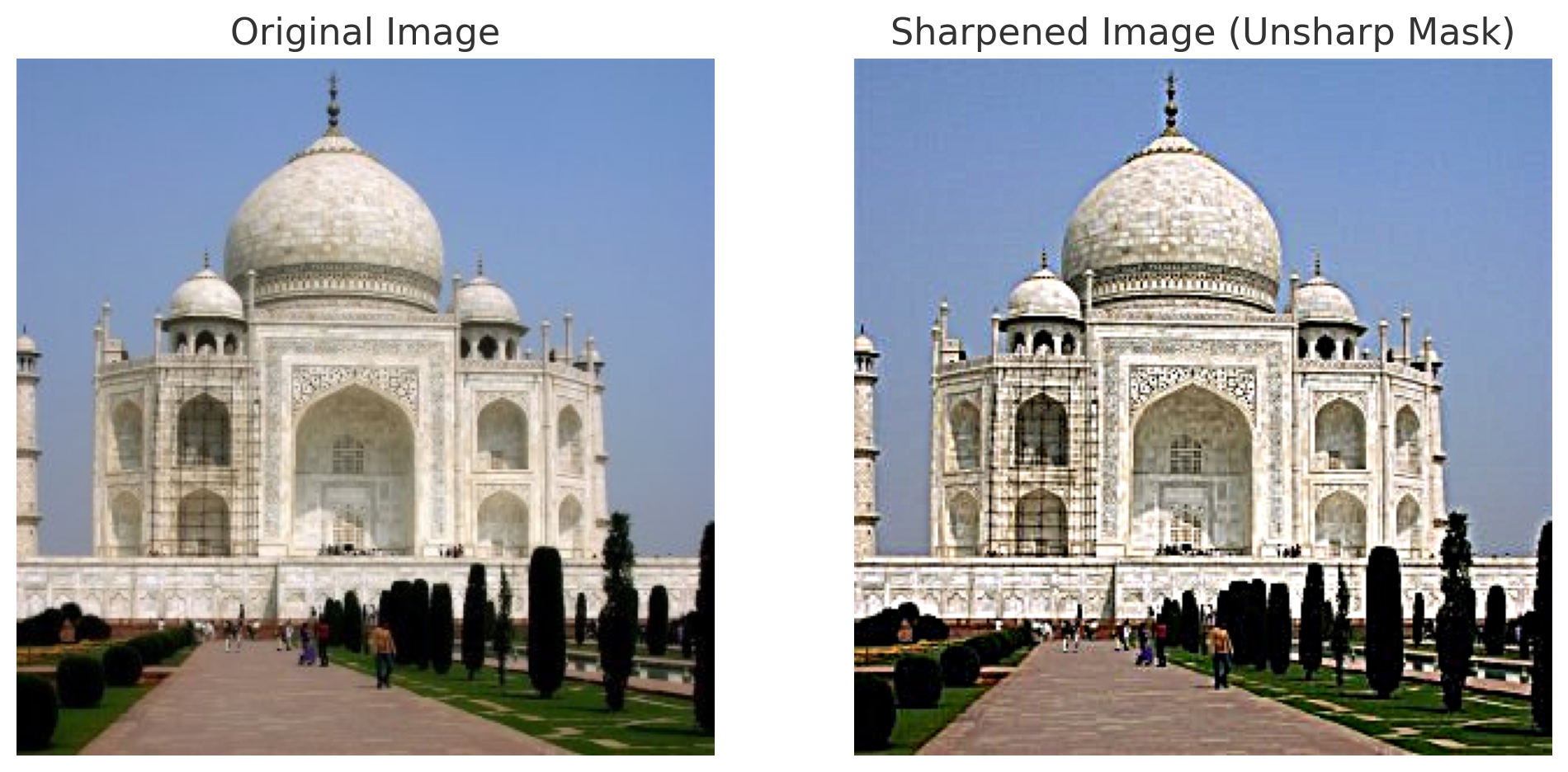

The written code sharpens an image using unsharp masking. The blur at the heart of the process is built with the cv2.getGaussianKernel() function, which generates a 1D Gaussian kernel; the outer product of this kernel with its transpose creates a 2D Gaussian filter.

This approach ensures that the sharpening process enhances important details while controlling noise, resulting in a visually cleaner output.
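A minimal NumPy sketch of unsharp masking follows. It is an illustration under stated assumptions rather than the project's code: `gaussian_kernel_1d` is a hand-rolled stand-in for `cv2.getGaussianKernel()`, and the kernel size, sigma, and sharpening strength `alpha` are hypothetical defaults. The 2D Gaussian (the outer product of the 1D kernel with itself) is applied separably, one axis at a time, which gives the same blur.

```python
import numpy as np

def gaussian_kernel_1d(ksize, sigma):
    """Normalized 1D Gaussian; stands in for cv2.getGaussianKernel here."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def gaussian_blur(image, ksize, sigma):
    """Separable Gaussian blur (odd ksize, replicated borders)."""
    g = gaussian_kernel_1d(ksize, sigma)
    padded = np.pad(image, ksize // 2, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="valid"), 0, rows)

def unsharp_mask(image, ksize=5, sigma=1.0, alpha=1.0):
    """Add alpha times the high-frequency detail back onto the image."""
    blurred = gaussian_blur(image, ksize, sigma)  # low frequencies
    detail = image - blurred                      # high frequencies
    return np.clip(image + alpha * detail, 0.0, 1.0)

# Synthetic grayscale image in [0, 1] with a soft-contrast vertical edge.
image = np.full((8, 8), 0.25)
image[:, 4:] = 0.75
sharpened = unsharp_mask(image)
print(sharpened[0].round(3))
```

Flat regions pass through unchanged, while values next to the edge overshoot on both sides, which is what makes the edge look crisper.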

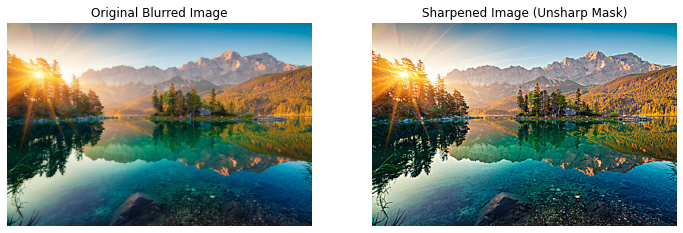

Below, we can see the result of blurring a sharpened image of a waterfall and then resharpening it. The resharpened image does a good job of recovering most of the lost information.

The goal of this part of the assignment is to create hybrid images using the approach described in the SIGGRAPH 2006 paper by Oliva, Torralba, and Schyns. Hybrid images are static images that change in interpretation as a function of the viewing distance. The basic idea is that high frequency tends to dominate perception when it is available, but, at a distance, only the low frequency (smooth) part of the signal can be seen. By blending the high frequency portion of one image with the low-frequency portion of another, you get a hybrid image that leads to different interpretations at different distances.
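The idea can be sketched as a low-pass of one image plus a high-pass of the other. The code below is an assumption-laden illustration, not the paper's or the project's implementation: the helper names, the two cutoff sigmas, and the rule of thumb for kernel size are hypothetical, and the inputs are assumed to be aligned grayscale float arrays of the same shape.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Separable Gaussian low-pass filter with replicated borders."""
    ksize = 2 * int(3 * sigma) + 1  # rule of thumb: cover about 3 sigma per side
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    padded = np.pad(image, ksize // 2, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="valid"), 0, rows)

def hybrid_image(im_low, im_high, sigma_low=4.0, sigma_high=2.0):
    """Combine the low frequencies of im_low with the high frequencies of im_high."""
    low = gaussian_blur(im_low, sigma_low)               # seen from far away
    high = im_high - gaussian_blur(im_high, sigma_high)  # seen up close
    return low + high

rng = np.random.default_rng(0)
far_image = rng.random((32, 32))   # placeholder for the "distant" picture
near_image = rng.random((32, 32))  # placeholder for the "close-up" picture
hybrid = hybrid_image(far_image, near_image)
print(hybrid.shape)
```

The two sigmas set the cutoff frequencies and usually need tuning per image pair; the result can also leave the [0, 1] range, so it may need clipping or rescaling before display.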

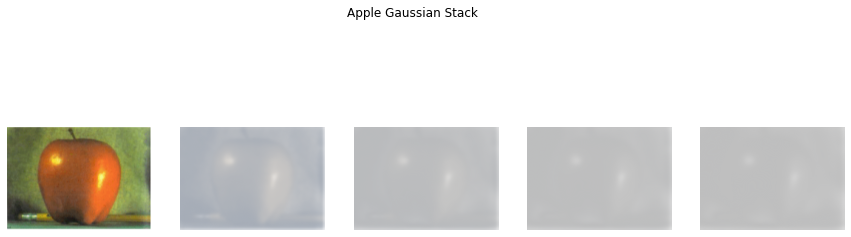

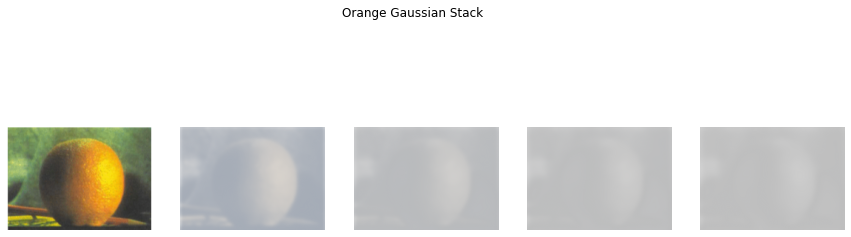

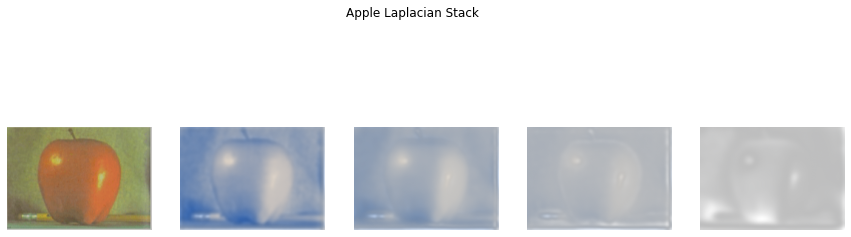

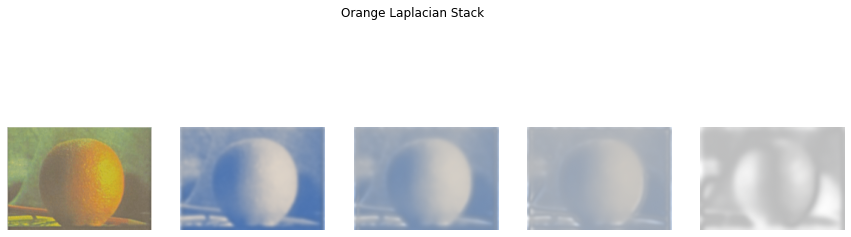

Below is the result of applying the Laplacian stack to get the Oraple:
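The blending behind a result like this can be sketched with Gaussian and Laplacian stacks. Everything in this example is an assumption made for illustration: the helper names, the number of levels, the blur parameters, and the random arrays standing in for the two aligned grayscale photos.

```python
import numpy as np

def gaussian_blur(image, ksize=9, sigma=2.0):
    """Separable Gaussian blur (odd ksize, replicated borders)."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    g /= g.sum()
    padded = np.pad(image, ksize // 2, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, g, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, g, mode="valid"), 0, rows)

def gaussian_stack(image, levels):
    """Repeatedly blur without downsampling; level 0 is the input itself."""
    stack = [image.astype(float)]
    for _ in range(levels - 1):
        stack.append(gaussian_blur(stack[-1]))
    return stack

def laplacian_stack(image, levels):
    """Band-pass layers plus the final low-pass residual; they sum to the input."""
    g = gaussian_stack(image, levels)
    return [g[i] - g[i + 1] for i in range(levels - 1)] + [g[-1]]

def blend(im_a, im_b, mask, levels=5):
    """Blend each frequency band using a progressively smoother mask."""
    la, lb = laplacian_stack(im_a, levels), laplacian_stack(im_b, levels)
    gm = gaussian_stack(mask, levels)
    return sum(m * a + (1.0 - m) * b for m, a, b in zip(gm, la, lb))

rng = np.random.default_rng(0)
im_a = rng.random((32, 32))  # placeholder for the first image
im_b = rng.random((32, 32))  # placeholder for the second image
mask = np.zeros((32, 32))
mask[:, :16] = 1.0           # left half from im_a, right half from im_b
blended = blend(im_a, im_b, mask)
print(blended.shape)
```

Because the mask is blurred more at the coarser levels, low frequencies mix over a wide band around the seam while fine detail switches over sharply, which hides the boundary.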